February 12th, 2026

Big Data Analytics: Top 7 Tools and Best Practices in 2026

By Simon Avila · 21 min read

After testing many big data analytics tools, I found that the right platform comes down to how you query data and whether your team prefers code or natural conversation. Here are 7 tools that handle large datasets well, plus the best practices that make them work in 2026.

What are big data analytics tools?

Big data analytics tools are software platforms that collect, process, and analyze large datasets to reveal patterns and business insights. You connect data sources like databases, business applications, or files, then ask questions or set parameters. The platform processes your request and generates charts, dashboards, or reports that show patterns, trends, and outliers you can use to make decisions.

To reveal patterns and actionable insights, these tools use techniques like:

7 Best big data analytics tools: At a glance

| Tool | Best for | Starting price | Key strength |
|---|---|---|---|
| Julius | Teams that prefer no-code analysis | $37/month | Natural language queries with AI-powered analysis |
| Apache Hadoop | Batch processing of massive datasets | Free (open source) | Distributed storage across server clusters |
| Tableau | Executive reporting and presentations | $75/user/month for the Creator license | Drag-and-drop dashboard builder |
| Apache Spark | Real-time analytics and ML workflows | Free (open source) | In-memory processing for speed |
| Microsoft Power BI | Teams using Office 365 and Azure | $14/user/month | Native Microsoft ecosystem integration |
| Snowflake | Cloud data warehousing at scale | Usage-based | Automatic scaling without infrastructure management |
| Google BigQuery | Large-scale data analysis without setup | Usage-based | Serverless queries on petabyte data |

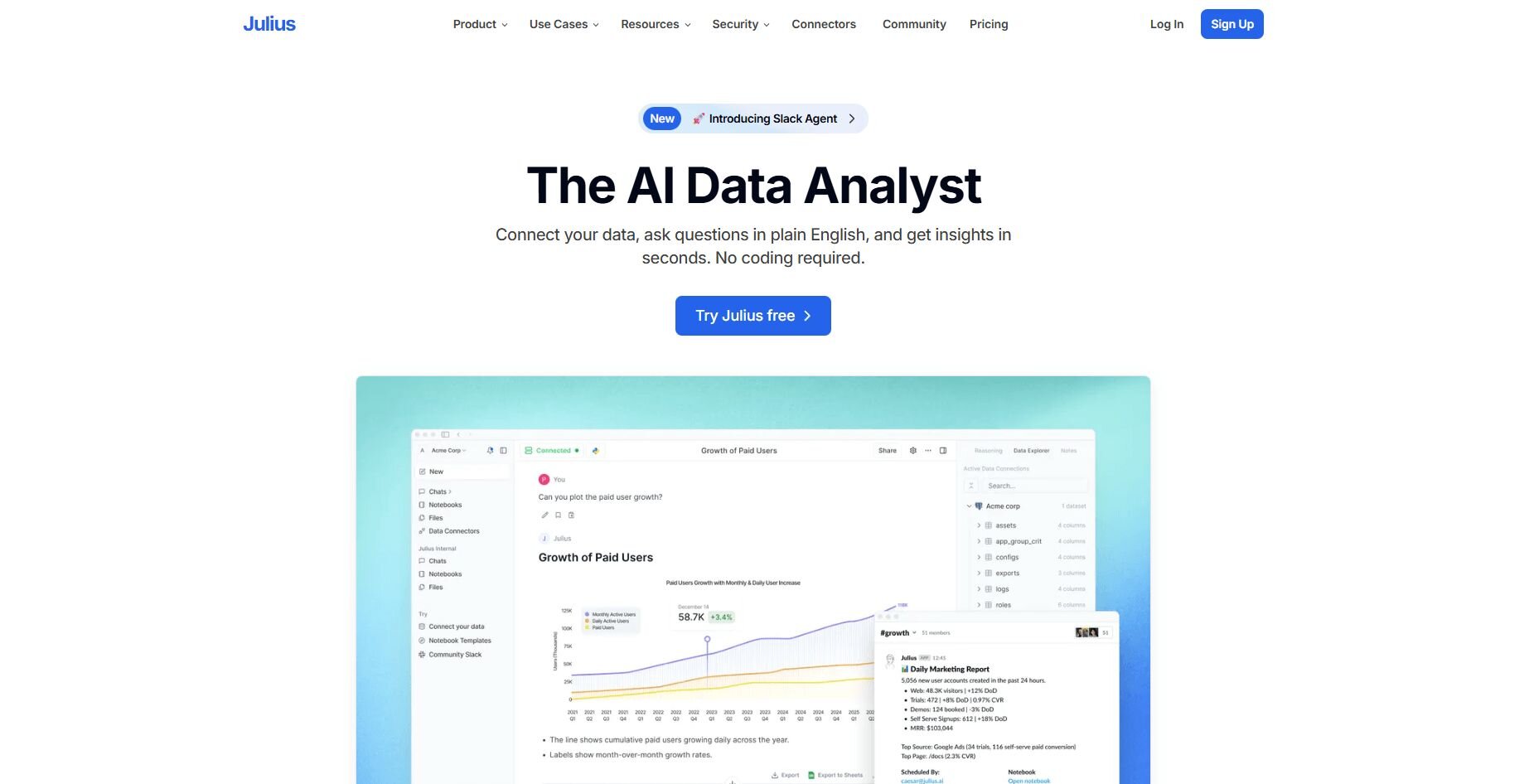

1. Julius: Best for no-code data analysis

We built Julius to let teams analyze data by asking questions instead of writing code. It connects to databases like Postgres, Snowflake, and BigQuery, plus business tools like Google Ads and Stripe. These connections let you analyze datasets too large for Excel or Google Sheets without moving data between systems.

Once connected, Julius learns your database structure and table relationships over time. This means it gives more accurate responses as you ask it more questions.

Julius handles both quick questions and repeatable analysis through its Notebooks feature. You can schedule reports to run automatically and deliver results to email or Slack, which works well for weekly dashboards or monthly summaries. The tool lets you hide code entirely if you prefer working through conversation, or access the semantic layer directly if you need technical control.

Julius starts at $37 per month.

2. Apache Hadoop: Best for batch processing of massive datasets

Apache Hadoop is an open-source framework that stores and processes large datasets across multiple servers instead of one machine. It works by having each server handle a portion of the data at the same time, then combining the results.
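The split-then-combine idea can be sketched in a few lines of plain Python. This is a simplified illustration, not Hadoop's actual API: threads stand in for servers, and the log lines, function names, and worker count are all made up for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(records, n_chunks):
    """Divide the records into roughly equal portions, one per worker."""
    return [records[i::n_chunks] for i in range(n_chunks)]


def count_status_codes(chunk):
    """Map step: each worker counts status codes in its own portion only."""
    return Counter(line.split()[-1] for line in chunk)


def combine(partial_counts):
    """Reduce step: merge the per-worker counts into a single result."""
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


def run_job(records, n_workers=3):
    """Process all chunks at the same time, then combine the partial results."""
    chunks = split_into_chunks(records, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_status_codes, chunks))
    return combine(partials)


# Hypothetical log lines, invented for illustration
logs = [
    "GET /home 200",
    "GET /cart 500",
    "POST /checkout 200",
    "GET /home 404",
    "GET /home 200",
]

result = run_job(logs)
print(dict(result))
```

In a real cluster the chunks live on different machines and the framework handles scheduling and failures, but the shape of the job is the same: work on portions in parallel, then merge.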

I tested Hadoop's distributed processing by running batch jobs on sample datasets to see how it handles workloads across server clusters. It handles data volumes that standard systems can't manage, but it works best for scheduled jobs that process data overnight or weekly rather than for real-time results. This makes it useful for log analysis, data warehousing, and historical reporting.

Apache Hadoop is free as an open-source project, though you need server space and technical skills to set it up.

3. Tableau: Best for executive reporting and presentations

Tableau is a data visualization platform that connects to big data sources like Hadoop, Snowflake, and cloud warehouses to build interactive dashboards and charts. It works through a drag-and-drop interface where you connect data sources, select fields, and build visualizations without writing code.

While testing Tableau, I built dashboards to visualize patterns across large datasets. I found it works best when analysts handle data setup and calculations and stakeholders use dashboards to spot trends. This structure keeps reports consistent and makes insights easy to review in board meetings and executive updates.

Tableau starts at $75 per user per month for the Creator license, which includes full dashboard building capabilities.

4. Apache Spark: Best for real-time analytics and ML workflows

Apache Spark is an open-source processing engine that analyzes big data by keeping information in active memory rather than saving and reloading it at each step. This makes it faster than systems that constantly write data to storage during processing.

I tested Spark by running real-time data jobs and machine learning workflows on streaming data. Spark analyzes data as it arrives, instead of waiting hours to process it later. Because Spark works in memory, it can run complex calculations on large datasets without slowing down. This makes it useful for fraud detection, recommendation systems, and live analytics dashboards.

Apache Spark is free as an open-source project, though you need technical expertise and infrastructure to deploy it.

5. Microsoft Power BI: Best for teams using Office 365 and Azure

Microsoft Power BI is a business analytics platform that connects to big data sources, Azure data lakes, and SQL databases to create reports and dashboards. It works through a familiar Microsoft Office-style interface that many business users already understand.

During testing, I built dashboards for sales performance and customer analytics to see how Power BI handles large datasets. It queries big data sources directly instead of loading everything into memory, which keeps reports responsive even with growing data volumes.

The native integration with Microsoft tools means teams already using Office 365 can start analyzing big data without learning new platforms. This makes it useful for organizations that store data in Azure or SQL Server and need accessible analytics.

Power BI starts at $14 per user per month.

6. Snowflake: Best for cloud data warehousing at scale

Snowflake is a cloud-based data warehouse that stores and processes large datasets without requiring you to manage physical servers.

I loaded large datasets and ran complex queries to see how Snowflake handles scaling. The platform separates storage from computing, so you can run heavy analysis without impacting data availability.

Snowflake scales automatically when you run more queries, then shrinks back down when you need less. This works well for businesses with changing analysis needs, seasonal reports, and expanding data.

Snowflake uses usage-based pricing that charges for storage and computing separately. We also have an in-depth Snowflake pricing guide if you’d like to learn more.

7. Google BigQuery: Best for large-scale data analysis without setup

Google BigQuery is a cloud data warehouse that runs queries on large datasets without requiring you to set up or manage servers. Google handles all the technical infrastructure automatically.

I ran sample queries analyzing sales trends and customer behavior to see how BigQuery handles analysis without setup. You write your query, submit it, and BigQuery processes the data without needing to configure servers or plan capacity ahead of time.

You pay only for what you use rather than maintaining infrastructure that sits idle between analysis runs. This makes it useful for teams that need to analyze large datasets quickly without technical overhead.

Google BigQuery charges based on usage: the amount of data you query and the amount you store.

Key features to look for in big data analytics tools

Big data analytics tools vary in what they offer, but certain features determine whether a platform handles your workload well or slows you down. Here are the components that matter most when comparing options:

Scalability: The tool should manage growing data volumes without slowing down. Look for platforms that add computing power automatically as your datasets grow instead of requiring manual adjustments.

Data connectivity: Strong connections to your existing data sources save time and reduce errors. I recommend testing how quickly a tool links to your specific databases, cloud storage, and business apps before you commit.

Processing speed: Fast results matter when teams need answers right away. Tools that work with data in memory or split processing across multiple systems typically deliver faster than those that rely on disk storage.

Visualization capabilities: Charts, dashboards, and reports should be simple to build and share. The best platforms let people create visuals without learning code or complex commands.

Security and compliance: Features like encryption, access controls, and activity logs protect sensitive information. I suggest choosing tools with certifications that match your industry standards.

Ease of use: Complicated tools slow down adoption across your team. Look for platforms with interfaces that people can learn quickly, whether through conversation, drag-and-drop design, or a familiar query language.

Real-world use cases of big data analytics

Business teams use big data analytics solutions to answer questions like which customer segments drive the most revenue, where operational bottlenecks slow production, or which marketing channels deliver the best ROI. Here's how different departments apply these platforms in daily work:

Marketing: Marketing teams track campaign performance by linking data from Google Ads, email, and website analytics. This shows which efforts drive conversions and where the budget should shift. Customer segmentation shows buying habits, engagement, and demographics. This helps in targeting the right audiences.

Sales: Sales teams identify which leads convert fastest and which products customers buy together. They also spot which territories need attention. Pipeline analysis reveals where deals stall and which actions help prospects close.

Finance: Finance departments analyze historical sales, seasonal trends, and market conditions to forecast revenue. I've found that budget tracking becomes clearer when teams pull data from multiple cost centers. This helps spot spending patterns and areas that need adjustment.

Product: Product teams analyze which features get used most and where customers struggle. They also track what causes people to stick around or leave. I recommend using this data to guide what to build next based on actual behavior instead of assumptions.

Operations: Operations teams track inventory levels, shipping times, and supplier performance to catch problems before they halt production. Resource planning looks at utilization rates to predict when more capacity becomes necessary.

Best practices for effective big data analytics

Start with one department before rolling out company-wide

Pick a single team with clear data needs and a manageable scope for your first implementation. I've seen companies try to launch across all departments at once, which creates confusion about data ownership, conflicting requirements, and stalled adoption. A successful test in marketing or finance builds momentum and creates internal advocates who help with broader rollout.

Test queries on sample data before running on full datasets
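One way to try this out is to run the same query against a random sample first, check that the output looks sensible, and only then point it at the full table. The sketch below uses Python's built-in sqlite3 module with an invented `orders` table. The table, column names, and sample size are assumptions for illustration, and sampling syntax differs between warehouses.

```python
import sqlite3

# The query is written once; only the data source changes between runs.
QUERY = """
SELECT region, COUNT(*) AS n_orders, AVG(amount) AS avg_amount
FROM {source}
GROUP BY region
ORDER BY region
"""


def build_demo_db(n_rows=10_000):
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    regions = ["north", "south", "east", "west"]
    rows = [(i, regions[i % 4], float(i % 100)) for i in range(n_rows)]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return conn


def run_on_sample(conn, sample_size=500):
    """Run the query against a random subset of rows."""
    source = f"(SELECT * FROM orders ORDER BY RANDOM() LIMIT {int(sample_size)})"
    return conn.execute(QUERY.format(source=source)).fetchall()


def run_on_full(conn):
    """Run the identical query against the whole table."""
    return conn.execute(QUERY.format(source="orders")).fetchall()


conn = build_demo_db()
print("sample:", run_on_sample(conn))
print("full:  ", run_on_full(conn))
```

If the sample run returns the wrong columns, unexpected nulls, or an obviously broken grouping, you find out before paying for a full scan.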

Document your data structure as you learn it

Set query limits and alerts for cost control
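A rough sketch of what a limit and an alert might look like in code follows. The `QueryBudget` class, the price per terabyte, and the thresholds are hypothetical values chosen for the example and are not any vendor's real rates. Most usage-based platforms offer their own built-in controls, which are the better option when available.

```python
from dataclasses import dataclass


@dataclass
class QueryBudget:
    price_per_tb: float        # hypothetical rate, dollars per terabyte scanned
    max_cost_per_query: float  # hard limit: costlier queries are refused
    monthly_alert_at: float    # soft limit: raise an alert once spend reaches this
    spent_this_month: float = 0.0

    def estimate_cost(self, bytes_scanned: int) -> float:
        """Convert an estimated scan size into dollars."""
        return bytes_scanned / 1e12 * self.price_per_tb

    def check(self, bytes_scanned: int) -> str:
        """Return 'blocked', 'alert', or 'ok' for a query of the given size."""
        cost = self.estimate_cost(bytes_scanned)
        if cost > self.max_cost_per_query:
            return "blocked"  # the query is not run and nothing is charged
        self.spent_this_month += cost
        if self.spent_this_month >= self.monthly_alert_at:
            return "alert"    # the query runs, but someone should be notified
        return "ok"


# Assumed numbers for illustration only
budget = QueryBudget(price_per_tb=5.0, max_cost_per_query=10.0, monthly_alert_at=12.0)
for size in (1 * 10**12, 3 * 10**12, 2 * 10**12):
    print(size, budget.check(size))
```

The per-query limit stops a single runaway query, while the monthly threshold catches slow growth from many small ones.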

Build repeatable templates instead of one-off analyses

Benefits of big data analytics

Big data analytics tools change how teams work by giving them access to insights that were previously out of reach or too slow to act on. Here are some of the benefits:

Less reliance on data teams for routine questions: Business users can now find answers about campaign performance, sales trends, or customer behavior on their own. This frees data specialists to focus on complex analysis instead of repetitive reporting.

Faster decision-making: You can respond to shifts in customer behavior, competitor moves, or economic trends while they still matter. I've seen marketing teams change budget allocation within hours based on current performance instead of waiting for monthly reports.

Discovery of revenue opportunities: Teams discover patterns in large datasets that small samples can't show. You can spot which customer segments spend the most over time or which products people buy together. These insights stay hidden in spreadsheets that can't handle enough data to reveal the pattern.

Better resource allocation: Teams can see which products, features, or services get used most and put budget where it matters. This beats making decisions based on guesses or small surveys that don't show what your full customer base actually does.

Competitive advantage: Companies that analyze data faster spot market opportunities, workflow problems, and customer needs before competitors do. This advantage grows over time as you keep learning from your expanding dataset.

Challenges of big data analytics

Big data analytics tools solve problems but can create new ones during implementation. Here are some of the challenges you may encounter:

Learning curve varies across teams: Non-technical users learn drag-and-drop tools quickly. Query-based platforms need SQL skills that take weeks or months to build. Some team members start right away, while others need training first.

Data silos prevent complete analysis: Customer data lives in your CRM, purchases sit in your payment system, and marketing numbers stay in Google Analytics. Connecting these takes API access, login details, and regular upkeep. I've seen teams spend months linking data sources.

Storage and computing costs grow quickly: Usage-based pricing seems flexible until your team runs more queries or stores more data. A tool costing a few hundred dollars monthly can jump to thousands. Cost tracking becomes its own job.

Inconsistent data quality creates wrong results: Missing information, duplicate entries, and format problems lead to bad analysis. Teams often find these issues after making decisions based on flawed reports. Cleaning and checking data becomes regular work.
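The data quality checks mentioned above (missing information, duplicate entries, format problems) can be automated with a small script that runs before any analysis. This is a minimal sketch in plain Python. The field names, the sample records, and the expected date format are assumptions made up for the example.

```python
from datetime import datetime


def quality_report(rows, key, required, date_field, date_format="%Y-%m-%d"):
    """Count rows with missing values, repeated keys, or badly formatted dates."""
    missing = duplicates = bad_dates = 0
    seen_keys = set()
    for row in rows:
        # Missing information: any required field that is absent or empty
        if any(row.get(field) in (None, "") for field in required):
            missing += 1
        # Duplicate entries: the same key value appearing more than once
        if row.get(key) in seen_keys:
            duplicates += 1
        else:
            seen_keys.add(row.get(key))
        # Format problems: dates that do not match the expected pattern
        try:
            datetime.strptime(str(row.get(date_field)), date_format)
        except ValueError:
            bad_dates += 1
    return {
        "rows": len(rows),
        "missing": missing,
        "duplicates": duplicates,
        "bad_dates": bad_dates,
    }


# Hypothetical customer records, invented for illustration
customers = [
    {"id": 1, "email": "a@example.com", "signup": "2026-01-05"},
    {"id": 2, "email": "", "signup": "2026-01-06"},               # missing email
    {"id": 2, "email": "b@example.com", "signup": "2026-01-06"},  # duplicate id
    {"id": 3, "email": "c@example.com", "signup": "06/01/2026"},  # wrong date format
]

report = quality_report(customers, key="id", required=["email"], date_field="signup")
print(report)
```

Running a report like this on a schedule turns data cleaning from a surprise into a routine check.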

Want big data analysis without the technical overhead? Try Julius

Big data analytics tools can overwhelm teams with setup complexity and technical barriers. Julius is an AI-powered data analysis tool that reduces those obstacles by connecting directly to databases like Postgres, Snowflake, and BigQuery, then letting you analyze data through natural language queries instead of code.

Here’s how Julius helps:

Built-in visualization: Get histograms, box plots, and bar charts on the spot instead of jumping into another tool to build them.

Quick single-metric checks: Ask for an average, spread, or distribution, and Julius shows you the numbers with an easy-to-read chart.

Catch outliers early: Julius highlights suspicious values and metrics that throw off your results, so you can make confident business decisions based on clean and trustworthy data.

Recurring summaries: Schedule analyses like weekly revenue or delivery time at the 95th percentile and receive them automatically by email or Slack.

Smarter over time with the Learning Sub Agent: Julius's Learning Sub Agent automatically learns your database structure, table relationships, and column meanings as you use it. With each query on connected data, it gets better at finding the right information and delivering faster, more accurate answers without manual configuration.

One-click sharing: Turn a thread of analysis into a PDF report you can pass along without extra formatting.

Ready to see how Julius can help your team make better decisions? Try Julius for free today.